What a great day at AWS Summit in Zurich! ⛰️

Together with Davide Gallitelli, I presented “AI in Action: From POC to Business Value” – a deep dive into transforming Generative AI initiatives from concepts to production-ready solutions that create business value.

We explored:

- Key steps for successful Agentic AI implementations in practice

- Critical building blocks for bringing your agents into production

- Demo featuring the power of Amazon Bedrock AgentCore

From POC to Production: The AgentCore Approach

One of the key challenges organizations face is moving AI projects from proof-of-concept to production. During our presentation, we demonstrated a practical implementation using Amazon Bedrock AgentCore – a comprehensive framework for building, deploying, and managing AI agents at scale.

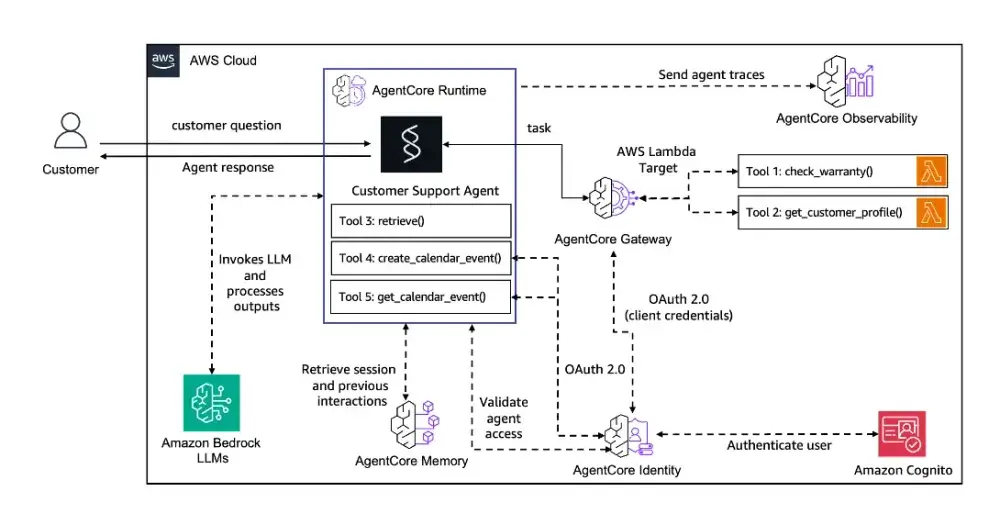

The demo showcased an AI-powered customer support assistant that handles warranty checks, customer profile management, and technical troubleshooting. This real-world example illustrates how AgentCore supplies the critical building blocks needed for production deployments.

Architecture and Key Components

The customer support assistant combines several AWS services with the open-source Strands Agents SDK:

- Strands Agents SDK for agent orchestration with Anthropic’s Claude Sonnet models

- Amazon Bedrock AgentCore Runtime to host and run the agent

- Amazon Bedrock AgentCore Gateway exposing tools via the Model Context Protocol (MCP)

- Amazon Bedrock AgentCore Identity for user authentication with Amazon Cognito

- Amazon Bedrock AgentCore Memory for conversation persistence

- Amazon Bedrock AgentCore Observability for monitoring and debugging

- Amazon Bedrock Knowledge Bases for storing and retrieving product knowledge

- AWS Lambda functions for tool execution
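To make the last building block more concrete, here is a minimal sketch of what a Lambda-backed warranty tool could look like. It is not the code from the demo: the `WARRANTIES` table, the `serial_number` argument, and the response fields are hypothetical, and the sketch assumes the function receives the tool arguments as a flat dictionary in `event`.

```python
from datetime import date

# Hypothetical warranty records; a real tool would query a database instead.
WARRANTIES = {
    "SN-1001": {"product": "Router X1", "expires": date(2030, 1, 31)},
    "SN-2002": {"product": "Laptop Z5", "expires": date(2020, 6, 30)},
}


def lambda_handler(event, context, today=None):
    """Return the warranty status for the serial number in the event.

    Assumes the event is a flat dict of tool arguments, for example
    {"serial_number": "SN-1001"}. `today` is injectable to ease testing.
    """
    today = today or date.today()
    serial = event.get("serial_number")
    record = WARRANTIES.get(serial)
    if record is None:
        return {"found": False, "message": f"No warranty found for {serial}"}
    return {
        "found": True,
        "product": record["product"],
        "expires": record["expires"].isoformat(),
        "active": record["expires"] >= today,
    }
```

Returning a small, structured dictionary rather than free text keeps the tool output easy for the model to interpret and easy to test in isolation.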

Building the Agent with Strands SDK

Using the Strands Agents SDK, we create a customer support agent with clear guidelines and instructions:

```python
from strands import Agent
from strands.models import BedrockModel

# Claude Sonnet 4 via a US cross-region inference profile on Amazon Bedrock
model = BedrockModel(model_id="us.anthropic.claude-sonnet-4-20250514-v1:0")

system_prompt = """
You are a helpful customer support agent ready to assist customers
with their inquiries and service needs.
You have access to tools to: check warranty status, view customer
profiles, and retrieve knowledge.
"""

# `tools` and `memory_hook` are assumed to be defined earlier
# (they are not shown in this excerpt).
agent = Agent(
    model=model,
    system_prompt=system_prompt,
    tools=tools,
    hooks=[memory_hook],
)
```

AgentCore Gateway Integration

The AgentCore Gateway serves as a managed MCP server that exposes Lambda functions as tools, providing:

- Managed infrastructure – No need to host custom MCP servers

- Built-in authentication – Requests are authenticated at the Gateway, so the user's identity is preserved when tools are invoked

- Automatic scaling – Based on demand

- Security – IAM-based access control
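Because the Gateway speaks MCP, a tool invocation is ultimately a JSON-RPC 2.0 message with the method `tools/call`. The following sketch builds such a request by hand to show what travels over the wire. The tool name `check_warranty_status` and its arguments are hypothetical, and in practice an MCP client library would construct and send this message for you, together with whatever access token your Gateway is configured to require.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC expects a distinct id per request


def build_tool_call(tool_name, arguments):
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and arguments, for illustration only.
request = build_tool_call("check_warranty_status", {"serial_number": "SN-1001"})
body = json.dumps(request)  # the JSON payload an MCP client would send
```

Seeing the raw message makes it clearer why no custom MCP server is needed: the agent only has to emit standard `tools/call` requests, and the managed endpoint routes them to the right function.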

Memory Integration for Conversation Persistence

AgentCore Memory enables the agent to retain conversations and user preferences, eliminating the need for customers to repeat information across sessions. The memory integration uses event handlers to:

- Load recent conversation history when the agent initializes

- Store messages and user preferences as conversations progress

- Attach relevant user preferences to enhance personalization
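The three steps above can be sketched with plain Python. This is a simplified stand-in, not the AgentCore Memory API: `InMemoryStore`, `MemoryHandlers`, and all method names are hypothetical, and the store lives in process memory purely so the example is self-contained.

```python
from collections import defaultdict


class InMemoryStore:
    """Stand-in for a managed memory service; keeps events per session."""

    def __init__(self):
        self._events = defaultdict(list)

    def save(self, session_id, role, text):
        self._events[session_id].append({"role": role, "text": text})

    def last_turns(self, session_id, k):
        if k <= 0:
            return []
        return self._events[session_id][-k:]


class MemoryHandlers:
    """Hypothetical event handlers mirroring the three steps above."""

    def __init__(self, store, session_id, preferences=None):
        self.store = store
        self.session_id = session_id
        self.preferences = preferences or {}

    def on_agent_initialized(self, k=5):
        """Load the k most recent messages to seed the agent's context."""
        return self.store.last_turns(self.session_id, k)

    def on_message_added(self, role, text):
        """Persist each message as the conversation progresses."""
        self.store.save(self.session_id, role, text)

    def build_context(self, user_message):
        """Prefix the incoming message with stored user preferences."""
        if not self.preferences:
            return user_message
        prefs = ", ".join(f"{k}={v}" for k, v in sorted(self.preferences.items()))
        return f"[user preferences: {prefs}]\n{user_message}"
```

Keeping the handlers separate from the store means the same agent code could run against a local store in tests and a managed memory service in production.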

Observability and Monitoring

AgentCore Observability provides comprehensive monitoring capabilities for production deployments:

- Real-time performance monitoring via CloudWatch dashboards tracking session count, latency, token usage, and error rates

- Execution tracing with step-by-step visualizations of agent workflows

- OpenTelemetry compatibility for integration with existing observability stacks
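To illustrate what the dashboard metrics mentioned above represent, here is a small sketch that derives session count, average latency, token usage, and error rate from per-invocation records. The `Invocation` record and its fields are assumptions made for this example; the managed service collects and aggregates this data for you.

```python
from dataclasses import dataclass


@dataclass
class Invocation:
    """Hypothetical record of a single agent invocation."""
    session_id: str
    latency_ms: float
    input_tokens: int
    output_tokens: int
    error: bool = False


def summarize(invocations):
    """Aggregate per-invocation records into dashboard-style metrics."""
    if not invocations:
        return {"sessions": 0, "avg_latency_ms": 0.0,
                "total_tokens": 0, "error_rate": 0.0}
    n = len(invocations)
    return {
        # Distinct sessions, not the number of invocations
        "sessions": len({i.session_id for i in invocations}),
        "avg_latency_ms": sum(i.latency_ms for i in invocations) / n,
        "total_tokens": sum(i.input_tokens + i.output_tokens for i in invocations),
        # Fraction of invocations that ended in an error
        "error_rate": sum(i.error for i in invocations) / n,
    }
```

Tracking these four numbers together is useful because they tend to move together: a spike in latency or token usage often precedes a rise in error rate.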

Production-Ready Benefits

This architecture demonstrates several key advantages for production deployments:

Operational Efficiency

- Automated warranty status checks reducing manual lookup time

- Knowledge base integration providing instant access to troubleshooting guides

- Memory persistence eliminating repetitive information gathering

Enhanced Customer Experience

- 24/7 availability for common support queries

- Consistent and accurate responses based on official documentation

- Personalized interactions through conversation memory

Scalability

- Serverless AgentCore services automatically handling scaling and authentication

- Knowledge base that can be updated without code changes

- Memory system scaling with user base

Production Readiness

- Built-in observability for monitoring and debugging

- Integrated security model

- Integration with existing AWS infrastructure

Key Takeaways

Building production-ready AI agents requires more than just a powerful language model. AgentCore provides the essential infrastructure components:

✅ Agent orchestration with flexible tool integration

✅ Managed infrastructure for scaling and reliability

✅ Memory systems for conversation persistence

✅ Authentication and identity management

✅ Observability for monitoring and debugging

The complete implementation demonstrates how these components work together to create business value from Generative AI initiatives.

Learn More

📖 Builder Center Article: Building an AI-Powered Customer Support Assistant with Amazon Bedrock AgentCore

💻 Amazon Bedrock AgentCore: AWS Documentation

Thanks to everyone who attended our session at AWS Summit Zurich, and special thanks to Davide Gallitelli for the great collaboration!